Summary

For more than 1,700 years, Saint Nicholas has spread compassion, gifts, and the Christmas spirit around the globe. Better known as “Santa Claus,” he now commands an enterprise with global reach, operating a true factory of dreams on a scale that would astound any logistics or production expert.

But everyone has limits, even a man with supernatural powers and a legion of elves to help him. Recognizing that he needed help to overcome challenges and keep the magic of Christmas alive, the jolly old man turned to technology. More specifically, to computer vision.

With it, he optimized processes, sped up deliveries, and improved working conditions for himself and his helpers. In this case study, we show how computer vision saved Christmas.

The Challenge

The main challenge Nicholas faces is the scale of his operation; after all, things have changed a lot in 17 centuries. When it all began, around the year 300, the world had roughly 200 million inhabitants. Today there are more than 8 billion of us, almost 2 billion of them children under 14, according to UN data.

Imagine receiving orders, organizing production lines, and planning the distribution of gifts (which must be done in a single night!) for an entire planet. Not to mention managing production spaces, cleaning, security, and everything else that is part of a modern industrial operation.

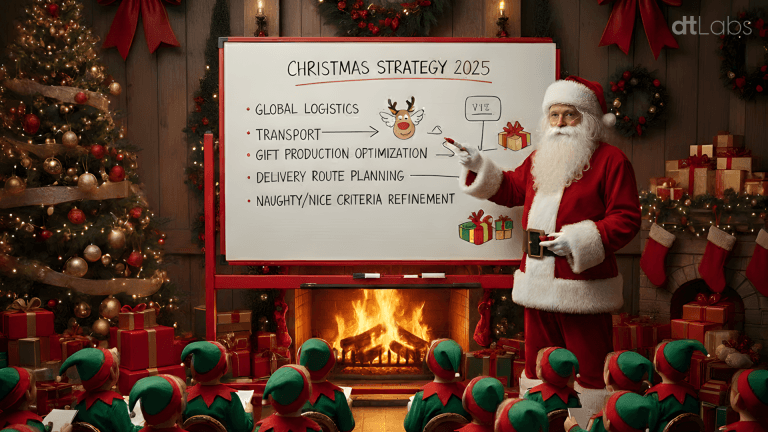

The Solution

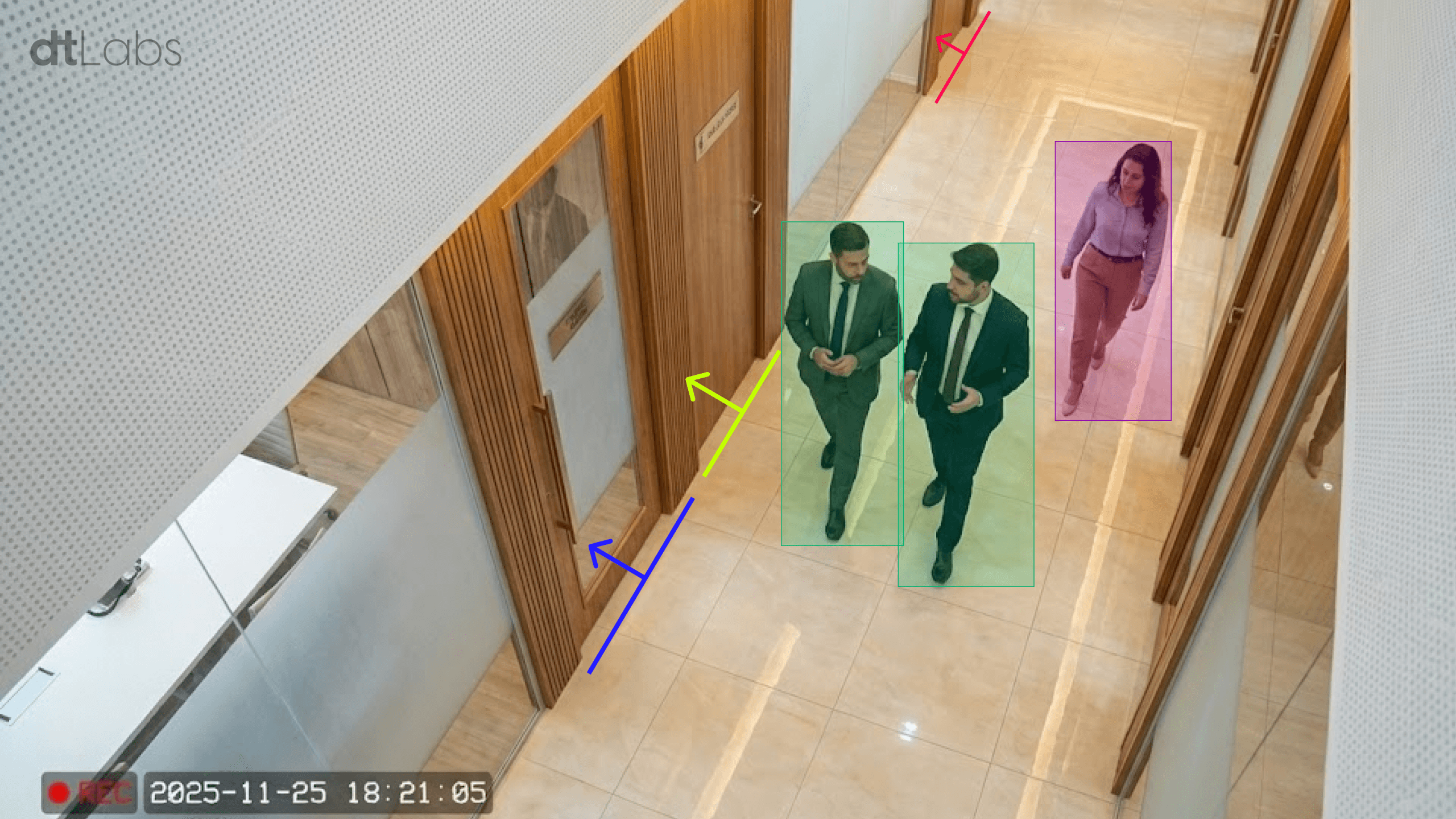

Computer vision was implemented at every stage of production and operations at the factory, a complex of thousands of square meters hidden among the pine forests of Lapland, in northern Finland, inside the Arctic Circle (or “North Pole,” as the region is known in popular folklore).

It starts with factory access control. This used to be done with manual “badge checks,” which caused long queues and a lot of frustration among the elves, as it was difficult to tell them apart. After all, they are all the same height, have rosy cheeks, and wear identical clothes. Today, thanks to facial recognition technology, the queues have disappeared.

On the factory floor, the safety of the helpers is fundamental. Computer vision is used to ensure that the elves are correctly using their personal protective equipment (such as pointy hats and scarves), respecting safety zones, and avoiding encounters with heavy machinery, such as forklifts.

On the production line, we have a curious mix of “old” and “new.” Due to technological advancements, Santa Claus cannot produce all types of gifts desired by children (PlayStations are still exclusive to Sony), but the factory still handles the most traditional toys, such as balls, cars, and dolls.

They are produced by artisan elves, with the same care as hundreds of years ago, supervised by master elves, each specialized in one type of toy.

Here, computer vision powers a sophisticated quality control system built on customized artificial intelligence models. Finished toys are placed on conveyor belts, where cameras quickly identify items that fall short of the standard and divert them to workshops for the necessary repairs. Approved items go to the packaging sector, where they are wrapped in traditional Christmas-themed gift paper and colorful ribbons.

With the gifts ready, it’s time to load the sleigh, which is no easy feat. The organization of the gifts, a technique perfected over many centuries, must consider the route, to ensure that the right item is at hand at the exact moment, and that the jolly old man doesn’t waste time looking for the presents.

Fortunately, due to the Earth’s rotation and time zones, Santa Claus has about 34 hours to complete his deliveries. Even so, to meet his schedule, he needs to visit thousands of homes every second. This means absolute control over the departure time is necessary.
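The “thousands of homes every second” figure can be sanity-checked with some quick arithmetic. The sketch below uses the roughly 2 billion children and the 34-hour window mentioned in this case study; the average number of children per home (2.5) is purely an illustrative assumption, not a figure from Santa's records.

```python
# Back-of-the-envelope check of the required delivery rate.
CHILDREN = 2_000_000_000      # "almost 2 billion" children, per the text
DELIVERY_WINDOW_HOURS = 34    # delivery window, per the text
CHILDREN_PER_HOME = 2.5       # ASSUMPTION: illustrative value only


def homes_per_second(children, hours, children_per_home):
    """Return the average number of homes to visit each second."""
    homes = children / children_per_home
    seconds = hours * 3600
    return homes / seconds


rate = homes_per_second(CHILDREN, DELIVERY_WINDOW_HOURS, CHILDREN_PER_HOME)
print(f"{rate:,.0f} homes per second")
```

Under these assumptions the rate comes out at roughly 6,500 homes per second, which is consistent with the “thousands” quoted above. A different household size changes the number, but not the order of magnitude.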

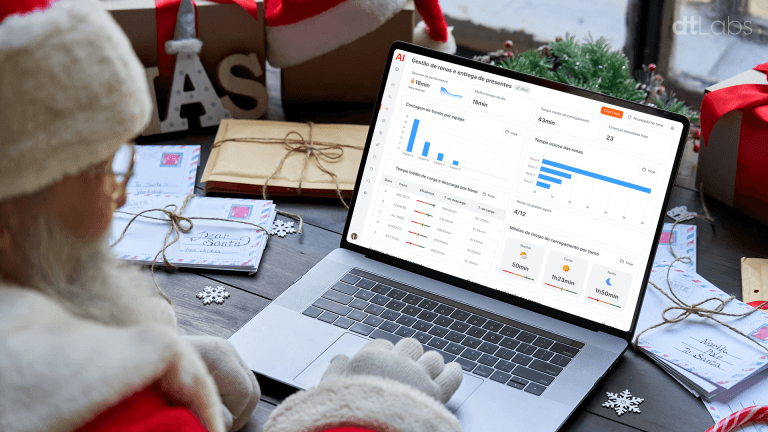

For this, a set of cameras feeds computer vision models trained to identify the sleigh’s wagons and gift packages. Combined with machine learning algorithms trained on operational data, these models make it possible to build a “digital twin” of the sleigh during loading.

Dashboards monitor metrics such as total loading time, maneuvering time, elf idleness, and number of wagons loaded per hour, and provide precise estimates of each wagon’s occupancy and the departure time.
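As a rough illustration of how such dashboard figures could be derived, the sketch below computes wagon occupancy and a remaining-time estimate from package counts. The `WagonStatus` structure, its field names, and the sample numbers are all hypothetical; the real system is not described at this level of detail.

```python
from dataclasses import dataclass


@dataclass
class WagonStatus:
    """Hypothetical per-wagon state derived from camera detections."""
    wagon_id: str
    capacity: int  # packages the wagon can hold
    loaded: int    # packages counted so far


def occupancy(wagon):
    """Fraction of the wagon's capacity already filled (0.0 to 1.0)."""
    return wagon.loaded / wagon.capacity


def minutes_to_departure(wagons, packages_per_minute):
    """Estimate the remaining loading time at the current loading rate."""
    if packages_per_minute <= 0:
        raise ValueError("loading rate must be positive")
    remaining = sum(w.capacity - w.loaded for w in wagons)
    return remaining / packages_per_minute


# Made-up sample data for two wagons.
wagons = [
    WagonStatus("wagon-1", capacity=200, loaded=150),
    WagonStatus("wagon-2", capacity=200, loaded=50),
]
for w in wagons:
    print(w.wagon_id, f"{occupancy(w):.0%}")
print(minutes_to_departure(wagons, packages_per_minute=40), "minutes left")
```

A constant loading rate is the simplest possible model. A production system would more plausibly learn the rate from historical operational data, as the text suggests.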

Computer vision is also used in other operational aspects of Santa Claus’s workshop. An intelligent surveillance system, with loitering and intrusion detection, monitors the perimeter to prevent the Grinch from spoiling Christmas.
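Loitering detection usually boils down to measuring how long a tracked object stays inside a monitored zone. The sketch below is a minimal version of that idea, assuming an upstream tracker already supplies `(track_id, timestamp)` pairs for objects seen inside the zone; the function name, the IDs, and the 60-second threshold are hypothetical.

```python
def loitering_ids(sightings, max_dwell_seconds):
    """Return IDs of tracked objects that stayed in the zone too long.

    sightings: iterable of (track_id, timestamp_seconds) pairs, one for
    each frame in which the object was seen inside the monitored zone.
    """
    first_seen = {}
    last_seen = {}
    for track_id, ts in sightings:
        first_seen[track_id] = min(ts, first_seen.get(track_id, ts))
        last_seen[track_id] = max(ts, last_seen.get(track_id, ts))
    return sorted(
        tid for tid in first_seen
        if last_seen[tid] - first_seen[tid] > max_dwell_seconds
    )


# Made-up sightings: the reindeer passes through, the Grinch lingers.
sightings = [
    ("reindeer-7", 0),
    ("grinch-1", 5),
    ("reindeer-7", 20),
    ("grinch-1", 130),
]
print(loitering_ids(sightings, max_dwell_seconds=60))
```

This simplification measures the span between the first and last sighting, so an object that leaves the zone and comes back later is treated as if it never left. A stricter version would reset the timer after each exit.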

Even facilities management is simplified, with automated alerts for bathroom cleaning, avoiding rigid schedules that occupy the team whether cleaning is necessary or not. Furthermore, room climate control is adjusted according to use, which gives the team more comfort and reduces energy consumption.

The Results

The implementation of computer vision in the factory brought clear positive results for Santa Claus and his helpers. Thanks to the productivity gains, they can take more days off between Christmas and the start of preparations for the next year.

The number of errors decreased, and team morale, along with working conditions, improved. What once seemed a daunting task is now performed with precision, every year, without fail, and with leeway for the next billion children.

Next Steps

Santa Claus is now studying the implementation of other systems in the factory to further optimize operations. One example is volumetric calculation to monitor the stock of hay for the reindeer during the winter. After all, they need to be very well-fed to face a trip around the world.

And the satisfaction is so great that word of mouth is spreading: the Easter Bunny has already requested a consultation, looking to optimize chocolate egg production.