Summary

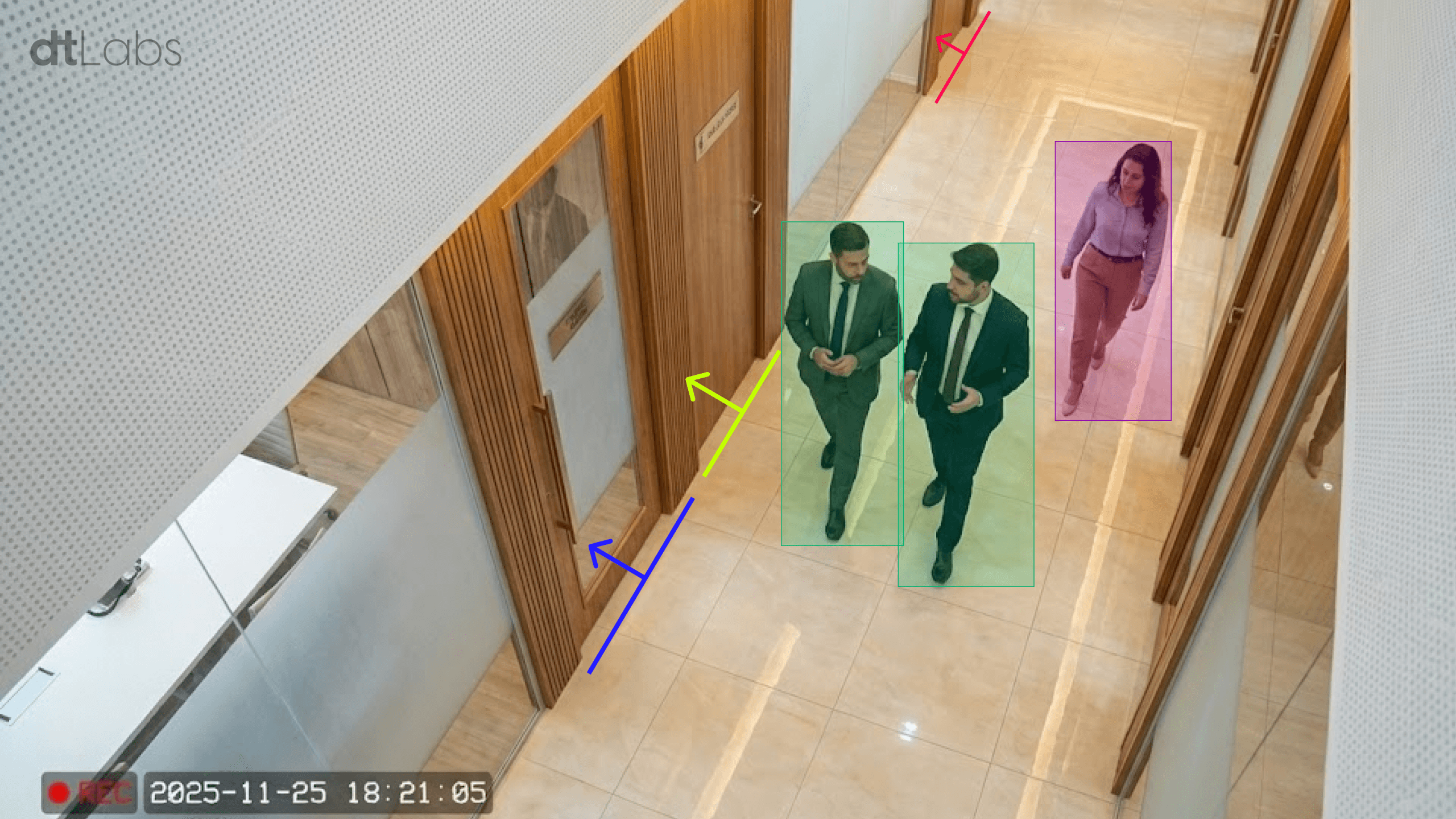

Every field has its own jargon, and the world of computer vision is no different. Terms like Megapixels (MP), Pixels Per Meter (PPM), Frames Per Second (FPS), and angles of incidence are frequently used but not always fully understood.

This article aims to unravel these concepts, explaining what they are, how they work, and their importance when evaluating the quality and suitability of a camera for different computer vision solutions.

Over the next few sections, we’ll explore each of these terms in detail, debunking the myth that “more megapixels is always better” and highlighting the crucial factors for a successful monitoring system.

What Are Megapixels?

Like cross-stitch embroidery, digital images are composed of many small elements grouped together. The smallest element of a digital image, equivalent to a single stitch, is the pixel.

The size of an image is usually expressed as two numbers: the number of pixels horizontally (width) and the number of pixels vertically (height). For example, a “Full HD” image is 1,920 pixels wide by 1,080 pixels high.

The resolution of the image is the product of these two numbers. In our example, the resolution is 1,920 × 1,080 = 2,073,600 pixels. To simplify the representation of this number, we use the term Megapixel (abbreviated as MP), which stands for 1 million pixels. In other words, we can say that a Full HD image has just over 2 Megapixels.
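The arithmetic above can be sketched in a few lines of Python. The function name `megapixels` is only an illustrative choice, not part of any particular library.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Return the pixel count of an image in megapixels (1 MP = 1,000,000 pixels)."""
    return (width_px * height_px) / 1_000_000


# Full HD: 1,920 x 1,080 = 2,073,600 pixels
full_hd = megapixels(1920, 1080)
print(f"Full HD: {full_hd:.2f} MP")  # Full HD: 2.07 MP
```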

Higher resolution allows for the capture of more detail, and in the early days of digital photography, image resolution increased dramatically with each new generation of cameras and sensors.

The difference in quality between a 0.3 MP image (common on early camera phones) and a 3 MP image (common on digital cameras of the same era) was noticeable, especially when the images were enlarged or printed. Therefore, it became a common belief among users that the more megapixels an image has, the better the quality.

But while more megapixels allow a camera to capture more detail, they’re not the only factor that determines quality. Quality also depends on the sensor, the lens, and the camera’s image processing, among many other factors. Megapixels are just one element of the equation.

What Are Pixels per Meter?

In monitoring systems, the concept of “pixels per meter” (PPM) is more important than an absolute measurement of resolution in Megapixels (MP). It indicates how many image pixels cover a 1-meter-long object in the observed scene.

The higher the PPM, the more detail the camera can capture. And the more detail, the easier it is to identify features like faces, license plates, or text. It is important to note that this measurement is relative, and varies according to camera resolution and the distance between the object and the lens.

Higher-resolution cameras have more pixels to distribute across an image area, increasing the PPM at the same distance. However, the further away the object, the lower the PPM.
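As a rough sketch of how resolution and distance interact, PPM can be estimated from the camera's horizontal resolution, its horizontal field of view, and the distance to the object. This assumes an idealized pinhole camera with no lens distortion and an object facing the camera head-on. The 90-degree field of view and the function name `ppm_at_distance` are hypothetical values chosen for illustration, not figures from this article.

```python
import math


def ppm_at_distance(h_resolution_px: int, hfov_deg: float, distance_m: float) -> float:
    """Estimate pixels per meter at a given distance (idealized pinhole model)."""
    # Width of the scene covered by the image at this distance, in meters.
    scene_width_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return h_resolution_px / scene_width_m


# Hypothetical Full HD camera with a 90-degree horizontal field of view.
for distance in (5, 10, 20):
    ppm = ppm_at_distance(1920, 90, distance)
    print(f"{distance:>2} m: about {ppm:.0f} PPM")
```

Under these assumptions, doubling the distance halves the PPM (roughly 192, 96, and 48 PPM at 5, 10, and 20 meters), while doubling the horizontal resolution doubles it.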

The figure above illustrates this concept well. Both the license plate and the traffic lights in the background are the same width, about 30 cm. However, because the license plate is closer to the camera, it occupies an area 313 pixels wide, compared to 83 pixels for the traffic lights.

Using a simple rule of three, we can calculate that a 1-meter-long object at the plate’s distance would span about 1,043 pixels (313 ÷ 0.30), compared to just 277 pixels (83 ÷ 0.30) at the traffic lights’ distance. In this example, the same camera has a PPM almost four times higher at the plate’s distance than at the traffic lights’ distance.
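The rule of three from this example can be written as a small helper. The measurements (313 and 83 pixels, 30 cm) come from the example above. The function name is illustrative, and results are rounded to the nearest whole number.

```python
def pixels_per_meter(width_px: float, real_width_m: float) -> float:
    """Scale a measured pixel width to a 1-meter-wide object at the same distance."""
    if real_width_m <= 0:
        raise ValueError("real_width_m must be positive")
    return width_px / real_width_m


plate_ppm = pixels_per_meter(313, 0.30)  # license plate, 30 cm wide
light_ppm = pixels_per_meter(83, 0.30)   # traffic lights, 30 cm wide

print(round(plate_ppm), round(light_ppm))          # 1043 277
print(f"ratio: {plate_ppm / light_ppm:.1f}x")      # ratio: 3.8x
```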

Additionally, it’s worth remembering that different AI models have different PPM requirements. For example, a facial recognition model might have a minimum requirement of 250 PPM. But if all you need is to detect whether a person is in the scene, 50 PPM might be enough.

In short, it is the PPM, not the megapixel count, that determines whether a camera is suitable for a computer vision solution.

What Are Angles of Incidence?

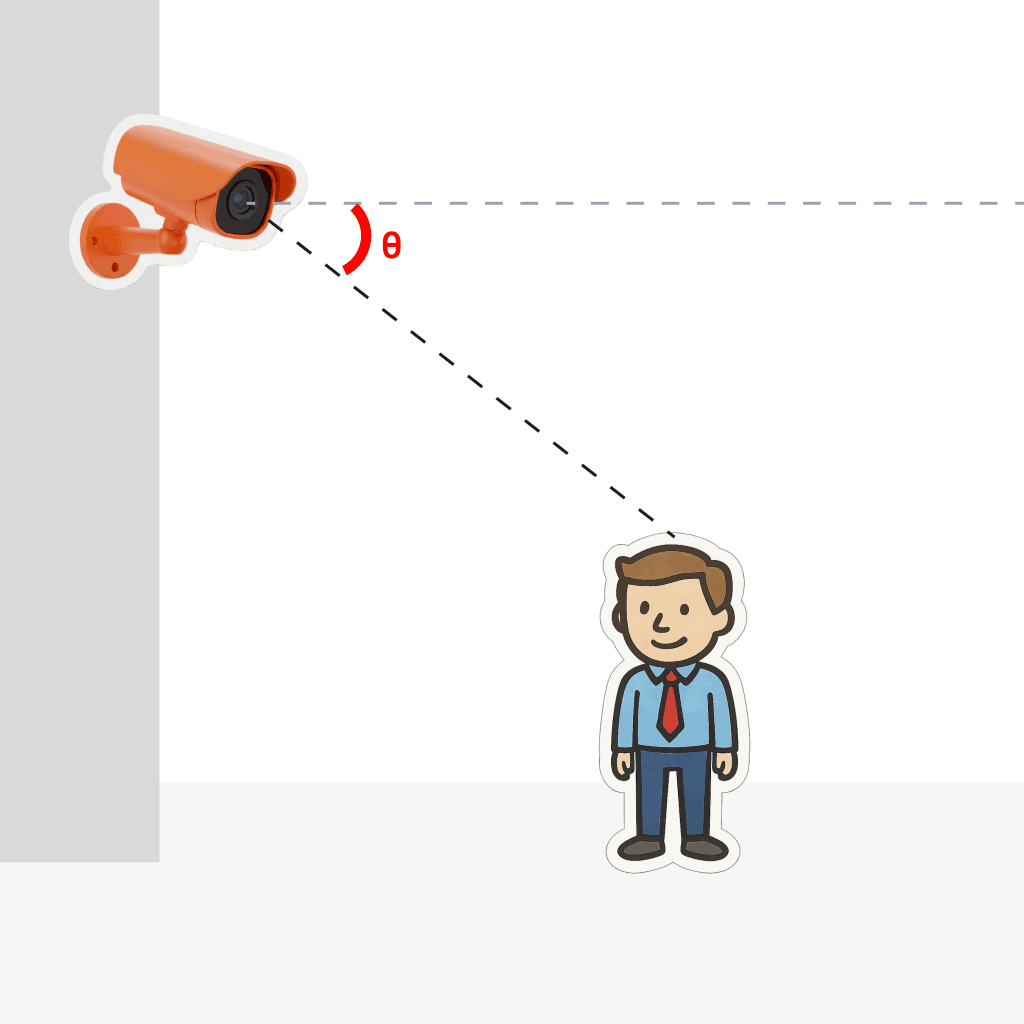

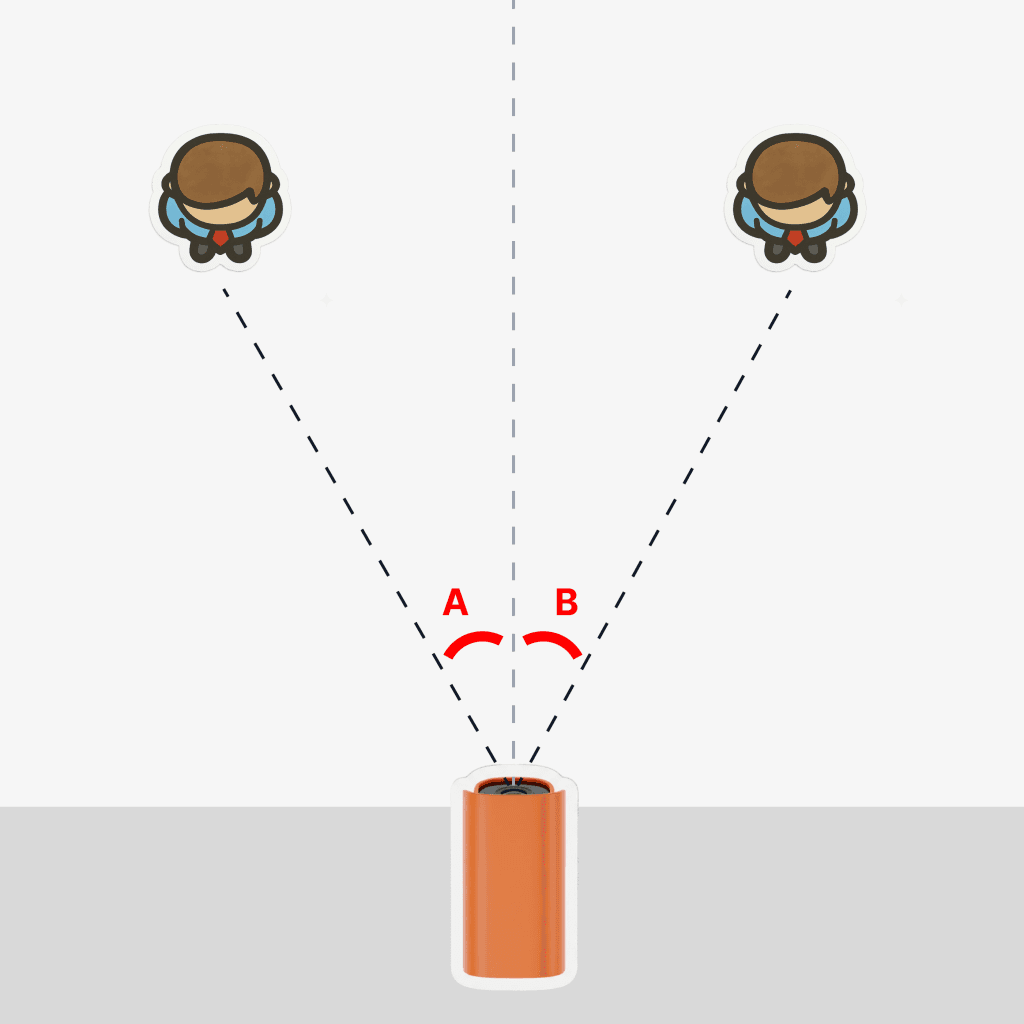

Angles of incidence are another important concept in computer vision. The term refers to the angle formed between the camera lens and the object being observed. There are two angles we should be concerned with.

The vertical angle of incidence refers to the angle of the camera relative to the ceiling, as indicated by the Greek letter theta (θ) in the corresponding figure.

The horizontal angle of incidence refers to the angle between the direction the camera is pointing and the object being observed. In the corresponding figure, the objects (people) are at angles a and b, respectively, relative to the direction of the camera. These are horizontal angles of incidence.

As with PPM, different AI models have different requirements regarding the angle of incidence. Extreme angles can cause image distortions, making object recognition difficult or even impossible. Correct camera positioning in relation to objects can be crucial for increasing detection accuracy.
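Both angles can be estimated from simple installation measurements. The sketch below is one possible approach under stated assumptions: the ceiling is horizontal, the vertical angle is measured along the line of sight from the camera to the target, and positions are given as (x, y) coordinates on a floor plan in meters. The function names and example values are hypothetical.

```python
import math


def vertical_angle_deg(camera_height_m: float, target_height_m: float,
                       horizontal_distance_m: float) -> float:
    """Angle between the horizontal (ceiling) plane and the line of sight to the target."""
    return math.degrees(
        math.atan2(camera_height_m - target_height_m, horizontal_distance_m)
    )


def horizontal_angle_deg(camera_xy, camera_heading_deg: float, object_xy) -> float:
    """Absolute angle between the camera's heading and the direction to the object."""
    dx = object_xy[0] - camera_xy[0]
    dy = object_xy[1] - camera_xy[1]
    bearing = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into the range [-180, 180) before taking the magnitude.
    diff = (bearing - camera_heading_deg + 180) % 360 - 180
    return abs(diff)


# Camera mounted at 3 m, face at 1.5 m, 5 m away along the floor.
print(f"vertical: {vertical_angle_deg(3.0, 1.5, 5.0):.1f} degrees")
# Camera at the origin pointing along +y (heading 90), person at (2, 4).
print(f"horizontal: {horizontal_angle_deg((0, 0), 90, (2, 4)):.1f} degrees")
```

Moving the camera farther back or mounting it lower reduces the vertical angle, which is often the easiest adjustment when a model's limit is exceeded.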

What Are FPS?

Another term commonly used in computer vision is FPS (Frames Per Second), which originated in the film industry. Videos are nothing more than sequences of static images (frames) displayed in quick succession. The more frames per second, the smoother the sensation of movement. Movies made for the cinema, for example, typically run at 24 frames per second.

But in computer vision, we can use the term differently, referring to how many frames will be processed in one second, regardless of the frame rate of the original video.
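One simple way to process fewer frames than the video provides is to skip frames at a regular interval. The sketch below only selects frame indices. It is an illustration of the idea, not how any specific product implements it, and the function name is hypothetical.

```python
def frames_to_process(source_fps: float, target_fps: float, total_frames: int) -> list:
    """Pick evenly spaced frame indices so that about target_fps frames per second are analyzed."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Never process more frames than the source provides.
    step = max(source_fps / target_fps, 1.0)
    selected = []
    next_pick = 0.0
    for index in range(total_frames):
        if index >= next_pick:
            selected.append(index)
            next_pick += step
    return selected


# One second of 30 FPS video analyzed at 5 FPS: every sixth frame is kept.
print(frames_to_process(30, 5, 30))  # [0, 6, 12, 18, 24]
```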

Again, different AI algorithms have different FPS requirements depending on the use case. In static or low-motion scenes, such as facial recognition or detecting people in an environment, a frame rate of 5 FPS may be more than sufficient. In fast-paced scenes, such as reading license plates of moving vehicles on a highway, a higher FPS, such as 12 FPS, might be required.

It may be tempting to set a high FPS, so the solution won’t “miss anything,” but we recommend caution. The higher the FPS, the more processing power is required to analyze the images, and the higher the power consumption of the equipment performing the analysis. However, the return, in terms of detection effectiveness, will likely be small or non-existent.

As with PPM and incidence angles, the right FPS depends on the AI model being used. When in doubt, we recommend starting with the minimum suggested value and increasing it gradually, only if necessary, until the desired results are achieved.

Recommendations for AIOS

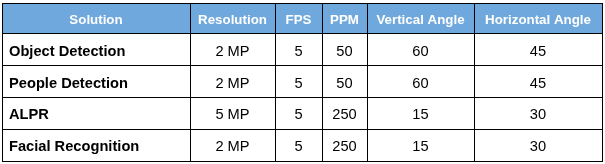

As mentioned, each AI solution or model has its own requirements for the parameters mentioned above. In the case of AIOS, our Edge AI and computer vision system, we can make some general recommendations based on common use cases.

As for resolution, a 2 MP sensor is sufficient for detecting people, vehicles, and objects, as well as for facial recognition. For reading license plates (ALPR, Automated License Plate Reading), we recommend at least 5 MP.

50 PPM is sufficient for detecting objects, vehicles, or people. More advanced solutions, such as facial recognition and license plate recognition (ALPR), require at least 250 PPM.

For detection of objects, machinery (such as forklifts), and persons, the maximum vertical and horizontal incidence angles are 60 and 45 degrees, respectively. For ALPR and facial recognition, the angles are 15 degrees (vertical) and 30 degrees (horizontal).

Finally, we have the frame rate. In all the solutions we mentioned, 5 FPS is sufficient.
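The recommendations in this section can be collected into a small lookup structure and used to sanity-check a planned installation. This is only an illustrative sketch: the class, dictionary keys, and `check_setup` function are hypothetical and are not part of the AIOS API. The numeric values are the ones stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    min_megapixels: float
    min_ppm: float
    max_vertical_deg: float
    max_horizontal_deg: float
    min_fps: float


# Values taken from the recommendations in this section.
RECOMMENDED = {
    "detection": Requirements(2, 50, 60, 45, 5),            # people, vehicles, objects
    "facial_recognition": Requirements(2, 250, 15, 30, 5),
    "alpr": Requirements(5, 250, 15, 30, 5),
}


def check_setup(use_case, megapixels, ppm, vertical_deg, horizontal_deg, fps):
    """Return a list of human-readable problems; an empty list means the setup fits."""
    req = RECOMMENDED[use_case]
    problems = []
    if megapixels < req.min_megapixels:
        problems.append(f"resolution {megapixels} MP is below {req.min_megapixels} MP")
    if ppm < req.min_ppm:
        problems.append(f"{ppm} PPM is below {req.min_ppm} PPM")
    if vertical_deg > req.max_vertical_deg:
        problems.append(f"vertical angle {vertical_deg} exceeds {req.max_vertical_deg} degrees")
    if horizontal_deg > req.max_horizontal_deg:
        problems.append(f"horizontal angle {horizontal_deg} exceeds {req.max_horizontal_deg} degrees")
    if fps < req.min_fps:
        problems.append(f"{fps} FPS is below {req.min_fps} FPS")
    return problems


# A Full HD camera is fine for detection but falls short of the ALPR resolution.
print(check_setup("detection", 2.07, 96, 17, 27, 5))  # []
print(check_setup("alpr", 2.07, 300, 10, 20, 5))
```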

See the summary table below. If you have any questions, please consult the AIOS documentation for more information.

Conclusion

As we’ve shown, several parameters influence the performance of a computer vision solution, and understanding the meaning and importance of each is crucial when choosing and installing components. Choose wisely, and both the results and your customers’ satisfaction will follow.